The Enduring Power of an Exact Solution: Foundations of Mie Theory

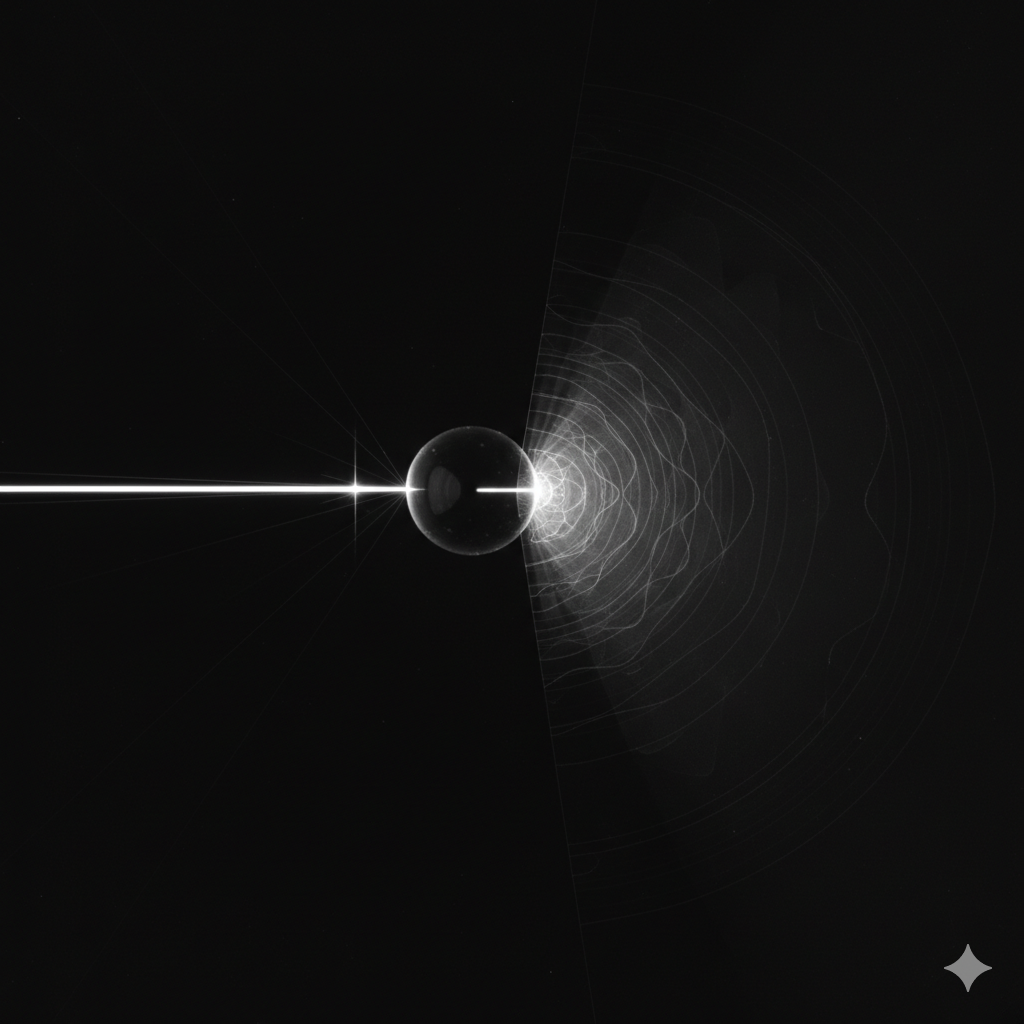

Mie theory is a cornerstone of computational light scattering: it provides a complete and rigorous analytical solution to Maxwell’s equations for the interaction of an electromagnetic plane wave with a homogeneous sphere. First published by Gustav Mie in 1908, the formalism is no historical artifact. It bridges the gap between the Rayleigh approximation, valid for particles much smaller than the wavelength of light, and geometric optics, valid for particles much larger. Its enduring relevance stems from its ability to describe scattering precisely in the intermediate regime where particle size is comparable to the wavelength, a condition that characterizes a vast array of systems in science and technology.

The Physical Problem and its Mathematical Formulation

The core problem addressed by Mie theory is the scattering and absorption of an incident plane electromagnetic wave by a single, homogeneous, isotropic sphere of a given radius and complex refractive index, which is embedded within a uniform, non-absorbing medium. The theory is a direct, analytical solution derived from Maxwell’s vector field equations in a source-free medium, a significant achievement at a time when the full implications of Maxwell’s work were not yet universally appreciated.

The solution strategy employs the method of separation of variables in a spherical coordinate system. The incident plane wave, the electromagnetic field inside the sphere, and the scattered field outside the sphere are each expanded into an infinite series of vector spherical harmonics (VSH). This mathematical decomposition is powerful because it separates the radial and angular dependencies of the fields, transforming a complex three-dimensional vector problem into a more manageable set of one-dimensional equations. The unknown expansion coefficients for the scattered and internal fields are then determined by enforcing the physical boundary conditions at the surface of the sphere—namely, that the tangential components of the electric and magnetic fields must be continuous across the interface.

Key Parameters and Outputs of a Mie Calculation

The entire physical interaction is governed by a small set of well-defined inputs that describe the particle, the medium, and the light. From these, the theory produces a complete description of the particle’s optical signature.

Inputs: The fundamental inputs for a Mie calculation are:

- The particle’s radius, $a$.

- The complex refractive index of the particle, $m_s = n_s + i k_s$.

- The real refractive index of the surrounding medium, $n_m$.

- The wavelength of the incident light in vacuum, $\lambda$.

These are typically combined into two critical dimensionless parameters:

- Size Parameter ($x$): Defined as $x = 2\pi a n_m / \lambda$, this parameter represents the ratio of the particle’s circumference to the wavelength of light within the medium. It is the primary determinant of the scattering regime (Rayleigh, Mie, or geometric).

- Relative Refractive Index ($m$): Defined as $m = m_s / n_m$, this complex value quantifies the optical contrast between the particle and its surroundings. The real part influences the phase velocity of light within the particle and thus governs refraction, while the imaginary part (the absorption index) dictates the degree to which electromagnetic energy is absorbed and converted into heat.
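As a minimal sketch of how the four inputs collapse into the two dimensionless parameters, the helper below applies the definitions of $x$ and $m$ given above. The function name `mie_parameters` and the example values are illustrative assumptions, not taken from any particular Mie code. The only requirement is that the radius and wavelength share the same length unit.

```python
import math


def mie_parameters(radius, wavelength_vacuum, m_particle, n_medium=1.0):
    """Return (x, m): size parameter and relative refractive index.

    radius and wavelength_vacuum must be in the same length unit.
    m_particle may be complex (n_s + i*k_s); n_medium is real.
    """
    if radius <= 0 or wavelength_vacuum <= 0 or n_medium <= 0:
        raise ValueError("radius, wavelength and n_medium must be positive")
    # x = 2*pi*a*n_m / lambda: circumference over wavelength in the medium
    x = 2.0 * math.pi * radius * n_medium / wavelength_vacuum
    # m = m_s / n_m: optical contrast between particle and medium
    m = complex(m_particle) / n_medium
    return x, m


# Hypothetical example: a 250 nm radius sphere with an illustrative index of
# 1.59 + 0.005i, suspended in water (n_m = 1.33) and lit at 532 nm.
x, m = mie_parameters(250e-9, 532e-9, 1.59 + 0.005j, 1.33)
print(f"x = {x:.3f}, m = {m:.4f}")
```

Because only $x$ and $m$ enter the solution, any two systems that share these values scatter identically, regardless of their absolute size or wavelength.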

Outputs: The solution of the boundary value problem yields several key outputs:

- Mie Coefficients ($a_n$, $b_n$): These are the complex-valued expansion coefficients for the scattered field, calculated for each multipole order $n$ (where $n=1$ corresponds to the dipole, $n=2$ to the quadrupole, and so on). They are expressed in terms of Riccati-Bessel functions evaluated at $x$ and $mx$, so they depend only on the size parameter and the relative refractive index. These coefficients contain all the physical information about the interaction. Even today, the deep physical origins of their resonant behavior remain an active area of research.

- Cross-Sections ($\sigma$) and Efficiency Factors ($Q$): The primary physical observables are the cross-sections for scattering ($\sigma_s$), absorption ($\sigma_a$), and extinction ($\sigma_{ext} = \sigma_s + \sigma_a$). A cross-section has units of area and represents the effective area the particle presents to the incident wave for that particular process. It is often convenient to express this in a dimensionless form as an efficiency factor, $Q$, by dividing the cross-section by the particle’s geometric cross-sectional area, $\pi a^2$. These efficiencies are calculated by summing the contributions from all multipole orders, weighted by the Mie coefficients:

  $$Q_s = \frac{2}{x^2}\sum_{n=1}^{\infty}(2n+1)\left(|a_n|^2 + |b_n|^2\right), \qquad Q_{ext} = \frac{2}{x^2}\sum_{n=1}^{\infty}(2n+1)\,\mathrm{Re}\{a_n + b_n\}$$

- Amplitude Scattering Matrix and Phase Function: For a spherical particle, the relationship between the incident and scattered electric field components is described by a simple diagonal matrix containing two complex functions, $S_1(\theta)$ and $S_2(\theta)$, which depend on the scattering angle $\theta$. These functions determine the amplitude, phase, and polarization of the scattered light in any direction. The phase function, which describes the angular distribution of scattered intensity, is derived from these matrix elements.
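The outputs listed above can be sketched in a few dozen lines of plain Python. This is a minimal illustration under stated assumptions, not a production implementation: it uses the recurrence scheme popularized by Bohren and Huffman (downward recurrence for the logarithmic derivative, upward recurrence for the Riccati-Bessel functions), assumes the $e^{-i\omega t}$ time convention so that absorbing particles have $k_s \ge 0$, and truncates the series at $n \approx x + 4x^{1/3} + 2$, a common heuristic. The function names are hypothetical.

```python
import math


def mie_coefficients(x, m):
    """Return lists (a, b) holding a_n, b_n for n = 1..n_stop (x > 0)."""
    m = complex(m)
    mx = m * x
    n_stop = int(x + 4.0 * x ** (1.0 / 3.0) + 2.0)  # truncation heuristic
    n_mx = int(max(n_stop, abs(mx))) + 15
    # Logarithmic derivative D_n(mx) = psi_n'(mx) / psi_n(mx),
    # computed by downward recurrence starting from D = 0 at high order.
    D = [0j] * (n_mx + 1)
    for n in range(n_mx, 1, -1):
        D[n - 1] = n / mx - 1.0 / (D[n] + n / mx)
    # Riccati-Bessel functions of x by upward recurrence.
    psi_prev, psi = math.cos(x), math.sin(x)    # psi_{-1}, psi_0
    chi_prev, chi = -math.sin(x), math.cos(x)   # chi_{-1}, chi_0
    a, b = [], []
    for n in range(1, n_stop + 1):
        psi_n = (2 * n - 1) / x * psi - psi_prev
        chi_n = (2 * n - 1) / x * chi - chi_prev
        xi_n = complex(psi_n, -chi_n)           # xi_n = psi_n - i*chi_n
        xi_prev = complex(psi, -chi)            # xi_{n-1}
        fa = D[n] / m + n / x
        fb = D[n] * m + n / x
        a.append((fa * psi_n - psi) / (fa * xi_n - xi_prev))
        b.append((fb * psi_n - psi) / (fb * xi_n - xi_prev))
        psi_prev, psi = psi, psi_n
        chi_prev, chi = chi, chi_n
    return a, b


def efficiencies(x, m):
    """Return (Q_ext, Q_s, Q_a) from the multipole sums."""
    a, b = mie_coefficients(x, m)
    s_sca = s_ext = 0.0
    for n, (an, bn) in enumerate(zip(a, b), start=1):
        s_sca += (2 * n + 1) * (abs(an) ** 2 + abs(bn) ** 2)
        s_ext += (2 * n + 1) * (an + bn).real
    q_ext, q_sca = 2.0 * s_ext / x ** 2, 2.0 * s_sca / x ** 2
    return q_ext, q_sca, q_ext - q_sca


def amplitudes(x, m, theta):
    """Return (S1, S2) at scattering angle theta (radians)."""
    a, b = mie_coefficients(x, m)
    mu = math.cos(theta)
    pi_prev, pi_n = 0.0, 1.0                    # pi_0, pi_1
    s1 = s2 = 0j
    for n, (an, bn) in enumerate(zip(a, b), start=1):
        tau_n = n * mu * pi_n - (n + 1) * pi_prev
        w = (2 * n + 1) / (n * (n + 1))
        s1 += w * (an * pi_n + bn * tau_n)
        s2 += w * (an * tau_n + bn * pi_n)
        # Advance the angular function to pi_{n+1}.
        pi_prev, pi_n = pi_n, ((2 * n + 1) * mu * pi_n - (n + 1) * pi_prev) / n
    return s1, s2


# Hypothetical example: a weakly absorbing sphere with x = 5.
q_ext, q_sca, q_abs = efficiencies(5.0, 1.33 + 0.01j)
s1, s2 = amplitudes(5.0, 1.33 + 0.01j, math.radians(90.0))
# For unpolarized light the scattered intensity scales with this quantity.
intensity = 0.5 * (abs(s1) ** 2 + abs(s2) ** 2)
print(q_ext, q_sca, q_abs, intensity)
```

Two useful sanity checks for any such sketch: for a real $m$ (no absorption) the extinction and scattering efficiencies should coincide, and in the forward direction the optical theorem requires $Q_{ext} = (4/x^2)\,\mathrm{Re}\,S_1(0)$.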

The Spectrum of Scattering: Situating Mie Theory

The power of Mie theory is best understood by seeing it as a master theory that unifies different scattering regimes. Its mathematical formalism naturally simplifies to well-known approximations in the appropriate limits. This demonstrates that a single, well-constructed Mie code can serve as a versatile tool for an enormous range of physical problems, from modeling nanoparticles to raindrops, simply by varying the input parameters. The table below provides a comparative framework.
